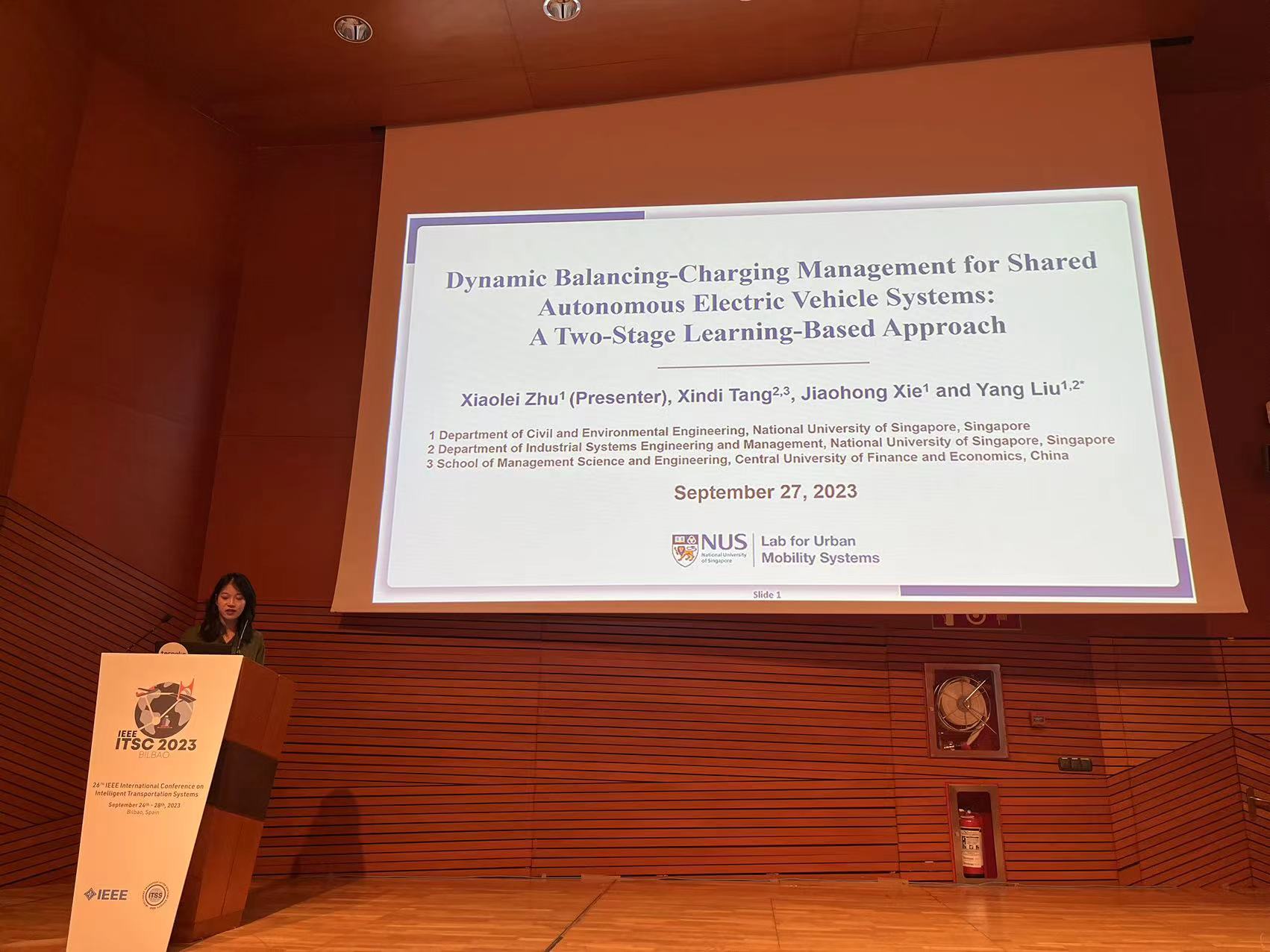

Authors: Xiaolei Zhu, Xindi Tang, Jiaohong Xie and Yang Liu*

Abstract: The proliferation of car-sharing systems, a key component of sustainable urban mobility, has significantly contributed to the shift towards green transportation modes. However, balancing supply and demand in large-scale Shared Autonomous Electric Vehicle Services (SAEVSs) remains a critical yet challenging task, particularly given the stochastic spatio-temporal dynamics and limited charging resources. To jointly optimize fleet management and charging decisions, we propose a two-stage learning-based approach that integrates the foresight of Deep Reinforcement Learning (DRL) techniques with the precision of optimization methods. We develop a multi-agent DRL model that acts as a "Manager", generating prescient grid-level Balancing-Charging (BC) strategies that guide relocation and charging decisions. We further customize a space-time-battery network flow model that acts as a "Worker", following the far-sighted guidance of the Manager to make optimal, high-quality vehicle-level decisions in real time. Through extensive experiments on a city-scale SAEVS, we demonstrate that our proposed approach significantly outperforms benchmark models in terms of both system-level profit and service quality. Our two-stage approach also lays a methodological foundation for further exploring the integration of DRL and optimization techniques to enhance decision-making in urban mobility systems.

Key Words: car sharing, shared autonomous electric vehicle, charging management, online fleet management, multi-agent deep reinforcement learning, space-time-battery network
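To make the term "space-time-battery network" more concrete, here is a minimal, hypothetical sketch of how such a network could be laid out. It is not the authors' formulation. The function name `build_stb_network`, the discrete battery levels, the assumption that relocating for d time steps consumes d battery levels, the absence of idle battery drain, and the fixed `charge_rate` are all illustrative assumptions. Each node is a (zone, time step, battery level) triple. Arcs represent idling, charging at zones with chargers, and relocating to another zone. A network flow solver would then route vehicles over these arcs, but that step is omitted here.

```python
from itertools import product


def build_stb_network(zones, horizon, max_battery, travel_steps,
                      charger_zones, charge_rate=1):
    """Hypothetical sketch of a space-time-battery network.

    Nodes are (zone, t, b) triples; arcs are (from_node, to_node, kind)
    with kind in {"idle", "charge", "relocate"}.
    """
    nodes = set(product(zones, range(horizon + 1), range(max_battery + 1)))
    arcs = []
    for (z, t, b) in sorted(nodes):
        if t == horizon:
            continue  # no arcs leave the final time layer
        # Idle: stay in the same zone for one step; assumed to use no battery.
        arcs.append(((z, t, b), (z, t + 1, b), "idle"))
        # Charge: only at zones with chargers, capped at max_battery.
        if z in charger_zones and b < max_battery:
            arcs.append(((z, t, b),
                         (z, t + 1, min(b + charge_rate, max_battery)),
                         "charge"))
        # Relocate: assumed to take d steps and consume d battery levels.
        for z2 in zones:
            if z2 == z:
                continue
            d = travel_steps[z][z2]
            if t + d <= horizon and b >= d:
                arcs.append(((z, t, b), (z2, t + d, b - d), "relocate"))
    return nodes, arcs


if __name__ == "__main__":
    nodes, arcs = build_stb_network(
        zones=["A", "B"], horizon=2, max_battery=2,
        travel_steps={"A": {"B": 1}, "B": {"A": 1}},
        charger_zones={"A"},
    )
    print(len(nodes), len(arcs))  # 18 24
```

Every arc moves strictly forward in time, so the resulting network is acyclic. This layered structure is what allows vehicle-level relocation and charging to be posed as a flow problem over the planning horizon.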